Amazon Web Services, Inc. (AWS), an Amazon.com, Inc. company, has announced five generative artificial intelligence (AI) innovations so that organisations of all sizes can build new generative AI applications, enhance employee productivity, and transform their businesses.

The announcement includes the general availability of Amazon Bedrock, a fully managed service that makes foundation models (FMs) from leading AI companies available through a single application programming interface (API). To give customers an even greater choice of FMs, AWS also announced that the Amazon Titan Embeddings model is generally available and that Llama 2 will be available as a new model on Amazon Bedrock, making Bedrock the first fully managed service to offer Meta’s Llama 2 through an API.

For organisations that want to maximise the value their developers derive from generative AI, AWS is also announcing a new capability (available soon in preview) for Amazon CodeWhisperer, AWS’s AI-powered coding companion, that securely customises CodeWhisperer’s code suggestions based on an organisation’s own internal codebase. To increase the productivity of business analysts, AWS is releasing a preview of Generative Business Intelligence (BI) authoring capabilities for Amazon QuickSight, a unified BI service built for the cloud, so customers can create compelling visuals, format charts, perform calculations, and more, all by simply describing what they want in natural language.

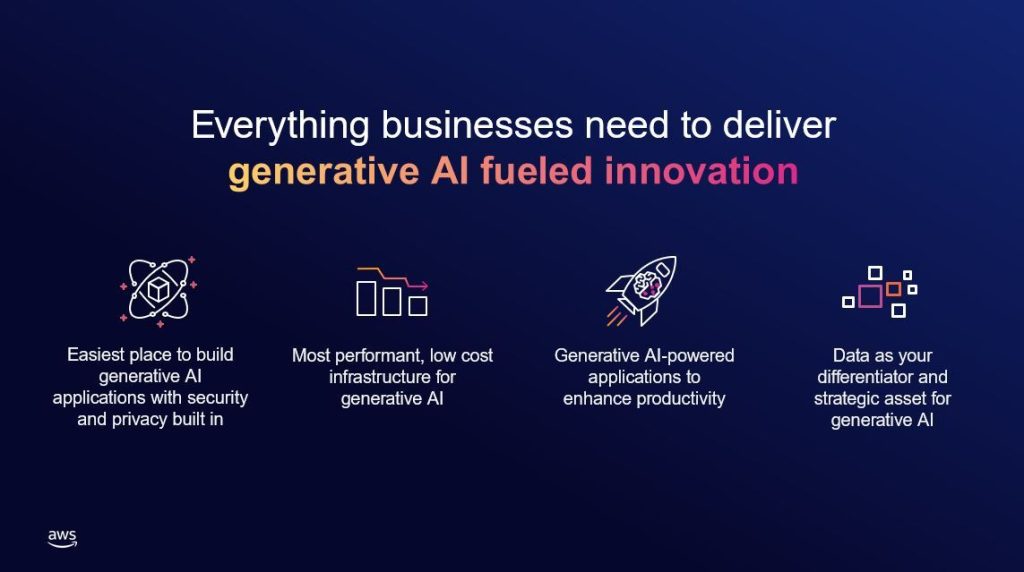

From Amazon Bedrock and Amazon Titan Embeddings to CodeWhisperer and QuickSight, these innovations add to the capabilities AWS provides customers at all layers of the generative AI stack. They serve organisations of all sizes with enterprise-grade security and privacy, a selection of best-in-class models, and powerful model customisation capabilities.

To get started with generative AI on AWS, visit aws.amazon.com/generative-ai/.

Reimagining Old Paradigms and Ushering in New Ones with Generative AI

Organisations of all sizes and across industries want to get started with generative AI to transform their operations, reimagine how they solve tough problems, and create new user experiences. While recent advancements in generative AI have captured widespread attention, many businesses have not been able to take part in this transformation.

These organisations want to get started with generative AI, but they are concerned about the security and privacy of these tools. They also want the ability to choose from a wide variety of FMs, so they can test different models to determine which works best for their unique use case. Customers also want to make the most of the data they already have by privately customising models to create differentiated experiences for their end users. Finally, they need tools that help them bring these new innovations to market quickly and the infrastructure to deploy their generative AI applications on a global scale.

That is why customers such as adidas, Alida, Asurion, BMW Group, Clariant, Genesys, Glide, GoDaddy, Intuit, LexisNexis Legal & Professional, Lonely Planet, Merck, NatWest Group, Perplexity AI, Persistent, Quext, RareJob Technologies, Rocket Mortgage, SnapLogic, Takenaka Corporation, Traeger Grills, the PGA TOUR, United Airlines, Verint, Verisk, WPS, and more have turned to AWS for generative AI.

Amazon Bedrock: Helping More Customers Build and Scale Generative AI Applications

Amazon Bedrock is a fully managed service that offers a choice of high-performing FMs from leading AI companies including AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon, along with a broad set of capabilities that customers need to build generative AI applications, simplifying development while maintaining privacy and security.

The flexibility of FMs makes them applicable to a wide range of use cases, powering everything from search to content creation to drug discovery. However, a few things stand in the way of most businesses looking to adopt generative AI.

First, they need a straightforward way to find and access high-performing FMs that give outstanding results and are best-suited to their purposes. Second, customers want application integration to be seamless, without managing huge clusters of infrastructure or incurring large costs. Finally, customers want easy ways to use the base FM and build differentiated apps with their data.

Because the data customers want to use for customisation is highly valuable intellectual property (IP), it must stay completely protected, secure, and private throughout that process, and customers want control over how the data is shared and used.

With Amazon Bedrock’s comprehensive capabilities, customers can easily experiment with a variety of top FMs and customise them privately with their proprietary data. Additionally, Amazon Bedrock offers differentiated capabilities: customers can create managed agents that execute complex business tasks, from booking travel and processing insurance claims to creating ad campaigns and managing inventory, without writing any code.

Amazon Titan Embeddings and Llama 2: Helping Customers Find the Right Model for Their Use Case

No single model is optimised for every use case, and to unlock the value of generative AI, customers need access to a variety of models to discover what works best based on their needs. That is why Amazon Bedrock makes it easy for customers to find and test a selection of leading FMs, including models from AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon, through a single API.
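Because every model sits behind the same Bedrock runtime API, trying a different model mostly means changing the model ID and the request body. The Python sketch below assumes the boto3 `bedrock-runtime` client and its `invoke_model` call, plus the model IDs `amazon.titan-embed-text-v1` and `meta.llama2-13b-chat-v1` and their JSON body fields as documented around launch; treat these IDs and field names as assumptions to check against the current Bedrock documentation (Llama 2 was not yet available when this announcement was made).

```python
import json
from typing import Any

# Assumed model IDs; confirm which IDs are enabled for your account and Region.
TITAN_EMBED_MODEL_ID = "amazon.titan-embed-text-v1"
LLAMA2_CHAT_MODEL_ID = "meta.llama2-13b-chat-v1"


def titan_embedding_body(text: str) -> str:
    """JSON request body for a Titan text-embedding call (assumed schema)."""
    return json.dumps({"inputText": text})


def llama2_body(prompt: str, max_gen_len: int = 256, temperature: float = 0.5) -> str:
    """JSON request body for a Llama 2 chat call (assumed schema)."""
    return json.dumps(
        {
            "prompt": prompt,
            "max_gen_len": max_gen_len,
            "temperature": temperature,
            "top_p": 0.9,
        }
    )


def invoke(model_id: str, body: str, region: str = "us-east-1") -> dict[str, Any]:
    """Send one request through the Bedrock runtime API and decode the JSON reply.

    Requires boto3 and AWS credentials with access to the chosen model.
    """
    import boto3  # imported lazily so the body builders work without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=model_id,
        body=body,
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it and parse the model's JSON output.
    return json.loads(response["body"].read())
```

With credentials configured, the same `invoke` helper would serve both models, for example `invoke(TITAN_EMBED_MODEL_ID, titan_embedding_body("Return policy for shoes"))["embedding"]` for a vector and `invoke(LLAMA2_CHAT_MODEL_ID, llama2_body("Summarise our return policy."))["generation"]` for text, where the `embedding` and `generation` response keys are likewise assumptions about each model's output format.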

Additionally, as part of a recently announced strategic collaboration, all future FMs from Anthropic will be available within Amazon Bedrock, with early access to unique features for model customisation and fine-tuning. With today’s announcement, Amazon Bedrock continues to broaden its selection of FMs with access to new models:

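- Amazon Titan Embeddings now generally available. Amazon Titan FMs are a family of models created and pretrained by AWS on large datasets, making them powerful, general-purpose models that support a variety of use cases. Titan Embeddings converts text into numerical representations (embeddings) for applications such as semantic search and personalisation.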

- Llama 2 coming in the next few weeks. Amazon Bedrock is the first fully managed generative AI service to offer Llama 2, Meta’s next-generation large language model (LLM), through a managed API. Llama 2 models come with significant improvements over the original Llama models, including training on 40% more data and a longer context length of 4,096 tokens (double that of the original Llama) for working with larger documents.
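Embedding models are typically used for semantic search: texts with similar meaning map to vectors that point in similar directions, so documents can be ranked by cosine similarity to a query vector. The sketch below shows that ranking step only; the three-dimensional vectors and document names are hypothetical stand-ins for the much longer vectors an embeddings model such as Titan Embeddings would return.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


def rank_documents(
    query_vec: list[float], doc_vecs: dict[str, list[float]]
) -> list[tuple[str, float]]:
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scores = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical toy vectors standing in for real embedding output.
docs = {
    "returns-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "careers-page": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.0]  # imagine this embeds "How do I send back a pair of shoes?"
print(rank_documents(query, docs)[0][0])  # returns-policy
```

Cosine similarity ignores vector length and compares only direction, which is the usual choice for comparing text embeddings; a production system would store the vectors in a vector index rather than scanning them in a loop.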

New Amazon CodeWhisperer Capability Will Allow Secure Customisations

Trained on billions of lines of Amazon and publicly available code, Amazon CodeWhisperer is an AI-powered coding companion that improves developer productivity. While developers frequently use CodeWhisperer for day-to-day work, they sometimes need to incorporate their organisation’s internal, private codebase (e.g., internal APIs, libraries, packages, and classes) into an application, none of which are included in CodeWhisperer’s training data.

However, internal code can be difficult to work with because documentation may be limited, and there are no public resources or forums where developers can ask for help. For example, to write a function for an ecommerce website that removes an item from a shopping cart, a developer must first understand the existing APIs, classes, and other internal code used to interact with the application.
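To make the shopping-cart example concrete, the sketch below shows the kind of function a developer might write and the internal knowledge it depends on. Everything here is hypothetical: `Cart`, `CartItem`, `InMemoryCartStore`, and `CartNotFoundError` stand in for an organisation’s private classes, and the correct `remove_item` depends on conventions (the store raises its own exception, and changes are only persisted by `save()`) that no public documentation would reveal.

```python
from dataclasses import dataclass, field


@dataclass
class CartItem:
    sku: str
    quantity: int


@dataclass
class Cart:
    """Hypothetical internal cart model."""
    cart_id: str
    items: dict[str, CartItem] = field(default_factory=dict)


class CartNotFoundError(KeyError):
    """Hypothetical internal exception raised when a cart does not exist."""


class InMemoryCartStore:
    """Hypothetical internal persistence API that the new function must use."""

    def __init__(self) -> None:
        self._carts: dict[str, Cart] = {}

    def load(self, cart_id: str) -> Cart:
        try:
            return self._carts[cart_id]
        except KeyError:
            raise CartNotFoundError(cart_id) from None

    def save(self, cart: Cart) -> None:
        self._carts[cart.cart_id] = cart


def remove_item(store: InMemoryCartStore, cart_id: str, sku: str, quantity: int = 1) -> Cart:
    """Remove up to `quantity` units of `sku`, dropping the line when it reaches zero."""
    if quantity < 1:
        raise ValueError("quantity must be at least 1")
    cart = store.load(cart_id)  # internal convention: raises CartNotFoundError
    item = cart.items.get(sku)
    if item is None:
        return cart  # item not in cart: nothing to remove
    item.quantity -= quantity
    if item.quantity <= 0:
        del cart.items[sku]
    store.save(cart)  # internal convention: persist changes explicitly
    return cart
```

A coding assistant trained only on public code could not know these conventions, which is the gap that customising suggestions on an internal codebase is meant to close.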

Amazon CodeWhisperer’s new customization capability will unlock the full potential of generative AI-powered coding by securely leveraging a customer’s internal codebase and resources to provide recommendations that are customized to their unique requirements. Developers save time through improved relevancy of code suggestions across a range of tasks.

New Generative BI Authoring Capabilities in Amazon QuickSight

Amazon QuickSight is a unified business intelligence (BI) service built for the cloud that offers interactive dashboards, paginated reports, and embedded analytics, plus natural-language querying capabilities using QuickSight Q, so every user in the organisation can access insights they need in the format they prefer. Business analysts often spend hours with BI tools exploring disparate data sources, adding calculations, and creating and refining visualizations before providing them in dashboards to business stakeholders.

To create a single chart, an analyst must first find the correct data source, identify the data fields, set up filters, and make necessary customisations to ensure the visual is compelling. If the visual requires a new calculation (e.g., year-to-date sales), the analyst must identify the necessary reference data and then create, verify, and add the visual to the report. Organisations would benefit from reducing the time that business analysts spend manually creating and adjusting charts and calculations so that they can devote more time to higher-value tasks.
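The year-to-date calculation mentioned above is simple arithmetic once the right data is found: sum every sale from 1 January of the reporting year up to the as-of date. The Python sketch below illustrates only that logic with hypothetical sales records; it is not QuickSight’s calculated-field syntax.

```python
from datetime import date


def year_to_date_total(sales: list[tuple[date, float]], as_of: date) -> float:
    """Sum sales from 1 January of as_of's year up to and including as_of."""
    start = date(as_of.year, 1, 1)
    return sum(amount for day, amount in sales if start <= day <= as_of)


# Hypothetical (date, amount) sales records.
sales = [
    (date(2022, 12, 30), 500.0),  # previous year: excluded
    (date(2023, 1, 15), 1200.0),
    (date(2023, 3, 2), 800.0),
    (date(2023, 9, 20), 950.0),
    (date(2023, 10, 5), 400.0),  # after the as-of date: excluded
]
print(year_to_date_total(sales, as_of=date(2023, 9, 30)))  # 2950.0
```

The two boundary conditions (start of year and the as-of date) are exactly the details an analyst must otherwise verify by hand before adding the visual to a report.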
