It is official: NVIDIA Hopper is the world’s fastest platform in industry-standard tests for inference on generative AI.

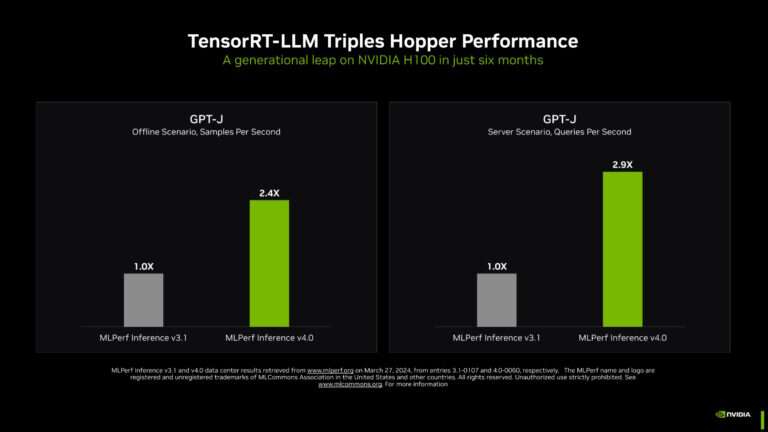

In the latest MLPerf benchmarks, NVIDIA TensorRT-LLM—software that speeds and simplifies the complex job of inference on large language models—boosted the performance of NVIDIA Hopper architecture GPUs on the GPT-J LLM nearly 3x over their results just six months ago.

The dramatic speedup demonstrates the power of NVIDIA’s full-stack platform of chips, systems, and software to handle the demanding requirements of running generative AI.

Leading companies are using TensorRT-LLM to optimise their models. NVIDIA NIM—a set of inference microservices that includes inferencing engines like TensorRT-LLM—makes it easier than ever for businesses to deploy NVIDIA’s inference platform.

NVIDIA Hopper: Raising the Bar in Generative AI

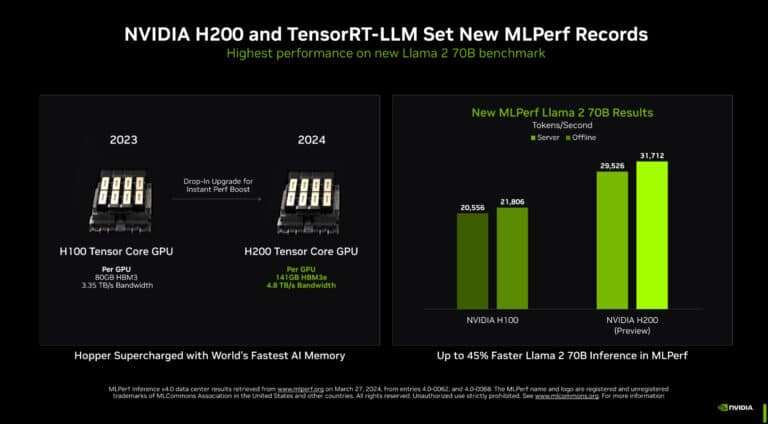

TensorRT-LLM running on NVIDIA H200 Tensor Core GPUs—the latest, memory-enhanced NVIDIA Hopper GPUs—delivered the fastest performance running inference in MLPerf’s biggest test of generative AI to date.

The new benchmark uses the largest version of Llama 2, a state-of-the-art large language model packing 70 billion parameters. The model is more than 10x larger than the GPT-J LLM first used in the September benchmarks.

The memory-enhanced H200 GPUs, in their MLPerf debut, used TensorRT-LLM to produce up to 31,000 tokens/second, a record on MLPerf’s Llama 2 benchmark.

The H200 GPU results include up to 14% gains from a custom thermal solution. It is one example of innovations beyond standard air cooling that systems builders are applying to their NVIDIA MGX designs to take the performance of Hopper GPUs to new heights.

Memory Boost for NVIDIA Hopper GPUs

NVIDIA is sampling H200 GPUs to customers today and will ship them in the second quarter. They will be available soon from nearly 20 leading system builders and cloud service providers.

H200 GPUs pack 141GB of HBM3e running at 4.8TB/s. That is 76% more memory flying 43% faster compared to H100 GPUs. These accelerators plug into the same boards and systems and use the same software as H100 GPUs.
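The percentages above can be checked with a few lines of arithmetic. This is a minimal sketch: the H200 figures come from the text, while the H100 baseline (80GB of memory at 3.35TB/s) is an assumption taken from the public H100 SXM specification and is not stated in this article.

```python
# H200 figures are quoted in the article.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8

# H100 baseline: an assumption (public H100 SXM spec), not from the article.
H100_MEMORY_GB = 80
H100_BANDWIDTH_TBPS = 3.35


def percent_gain(new: float, old: float) -> float:
    """Return how much larger `new` is than `old`, in percent."""
    return (new / old - 1.0) * 100.0


memory_gain = percent_gain(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_gain = percent_gain(H200_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)

print(f"memory: +{memory_gain:.0f}%, bandwidth: +{bandwidth_gain:.0f}%")
# prints "memory: +76%, bandwidth: +43%"
```

Under that assumed baseline, the result matches the 76% and 43% figures quoted for the H200.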

With HBM3e memory, a single H200 GPU can run an entire Llama 2 70B model with the highest throughput, simplifying and speeding inference.
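A rough back-of-the-envelope estimate shows why the model fits on one GPU. The sketch below counts model weights only, using decimal gigabytes. The bytes-per-parameter values are illustrative assumptions about common weight formats, not a statement of the precision used in the MLPerf submission, and a real deployment also needs memory for the KV cache and activations.

```python
PARAMS = 70e9          # Llama 2 70B parameter count, from the article
H200_MEMORY_GB = 141   # H200 memory capacity, from the article

# Illustrative assumption: bytes stored per parameter for two weight formats.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def weight_footprint_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


for fmt, nbytes in BYTES_PER_PARAM.items():
    weights_gb = weight_footprint_gb(PARAMS, nbytes)
    headroom_gb = H200_MEMORY_GB - weights_gb
    print(f"{fmt}: weights {weights_gb:.0f}GB, headroom {headroom_gb:.0f}GB")
# prints:
# fp16: weights 140GB, headroom 1GB
# fp8: weights 70GB, headroom 71GB
```

By this estimate, 16-bit weights would leave almost no room for anything else on a 141GB GPU, while 8-bit weights leave about half the memory free for the KV cache and batching.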

GH200 Packs Even More Memory

Even more memory—up to 624GB of fast memory, including 144GB of HBM3e—is packed in NVIDIA GH200 Superchips, which combine in one module an NVIDIA Hopper architecture GPU and a power-efficient NVIDIA Grace CPU. NVIDIA accelerators are the first to use HBM3e memory technology.

With nearly 5 TB/second memory bandwidth, GH200 Superchips delivered standout performance, including on memory-intensive MLPerf tests such as recommender systems.

Sweeping Every MLPerf Test

On a per-accelerator basis, NVIDIA Hopper GPUs swept every test of AI inference in the latest round of the MLPerf industry benchmarks.

In addition, NVIDIA Jetson Orin remains at the forefront in MLPerf’s edge category. In the last two inference rounds, Orin ran the most diverse set of models in the category, including GPT-J and Stable Diffusion XL.

The MLPerf benchmarks cover today’s most popular AI workloads and scenarios, including generative AI, recommendation systems, natural language processing, speech, and computer vision. NVIDIA was the only company to submit results on every workload in the latest round and every round since MLPerf’s data center inference benchmarks began in October 2020.

Continued performance gains translate into lower costs for inference, a large and growing part of the daily work for the millions of NVIDIA GPUs deployed worldwide.

Advancing What’s Possible

Pushing the boundaries of what’s possible, NVIDIA demonstrated three innovative techniques in a special section of the benchmarks called the open division, created for testing advanced AI methods.

NVIDIA engineers used a technique called structured sparsity—a way of reducing calculations, first introduced with NVIDIA A100 Tensor Core GPUs—to deliver up to 33% speedups on inference with Llama 2.
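To illustrate the idea, here is a minimal sketch of the 2:4 pattern that A100 sparse Tensor Cores support: in every group of four weights, the two with the smallest magnitude are set to zero. The article does not say which pattern or pruning method the open division submission used, so the function `prune_2_of_4` and the magnitude-based selection are hypothetical and for illustration only.

```python
def prune_2_of_4(weights: list[float]) -> list[float]:
    """Zero the two smallest-magnitude values in every group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: list[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned


row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
print(prune_2_of_4(row))
# prints [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
```

Because exactly half of each group is zero in a fixed pattern, hardware that understands the pattern can skip those multiplications, which is where the reduction in calculations comes from.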

A second open division test found inference speedups of up to 40% using pruning, a way of simplifying an AI model—in this case, an LLM—to increase inference throughput.

Finally, an optimisation called DeepCache reduced the math required for inference with the Stable Diffusion XL model, accelerating performance by a whopping 74%.

All these results were run on NVIDIA H100 Tensor Core GPUs.

A Trusted Source for Users

MLPerf’s tests are transparent and objective, so users can rely on the results to make informed buying decisions.

NVIDIA’s partners participate in MLPerf because they know it is a valuable tool for customers evaluating AI systems and services.

Partners submitting results on the NVIDIA AI platform in this round included ASUS, Cisco, Dell Technologies, Fujitsu, GIGABYTE, Google, Hewlett Packard Enterprise, Lenovo, Microsoft Azure, Oracle, QCT, Supermicro, VMware (recently acquired by Broadcom), and Wiwynn.

All the software NVIDIA used in the tests is available in the MLPerf repository. These optimisations are continuously folded into containers available on NGC, NVIDIA’s software hub for GPU applications, as well as NVIDIA AI Enterprise—a secure, supported platform that includes NIM inference microservices.

The Next Big Thing

The use cases, model sizes and datasets for generative AI continue to expand. That is why MLPerf continues to evolve, adding real-world tests with popular models like Llama 2 70B and Stable Diffusion XL.

Keeping pace with the explosion in LLM sizes, NVIDIA founder and CEO Jensen Huang announced at GTC that NVIDIA Blackwell architecture GPUs will deliver the new levels of performance required for multitrillion-parameter AI models.

Inference for large language models is difficult, requiring both expertise and the full-stack architecture NVIDIA demonstrated on MLPerf with Hopper architecture GPUs and TensorRT-LLM. There is much more to come.

Learn more about MLPerf benchmarks and the technical details of this inference round.
