Red Hat, Inc., the world’s leading provider of open source solutions, announced advances in Red Hat OpenShift AI, an open hybrid Artificial Intelligence (AI) and Machine Learning (ML) platform built on Red Hat OpenShift that enables enterprises to create and deliver AI-enabled applications at scale across hybrid clouds.

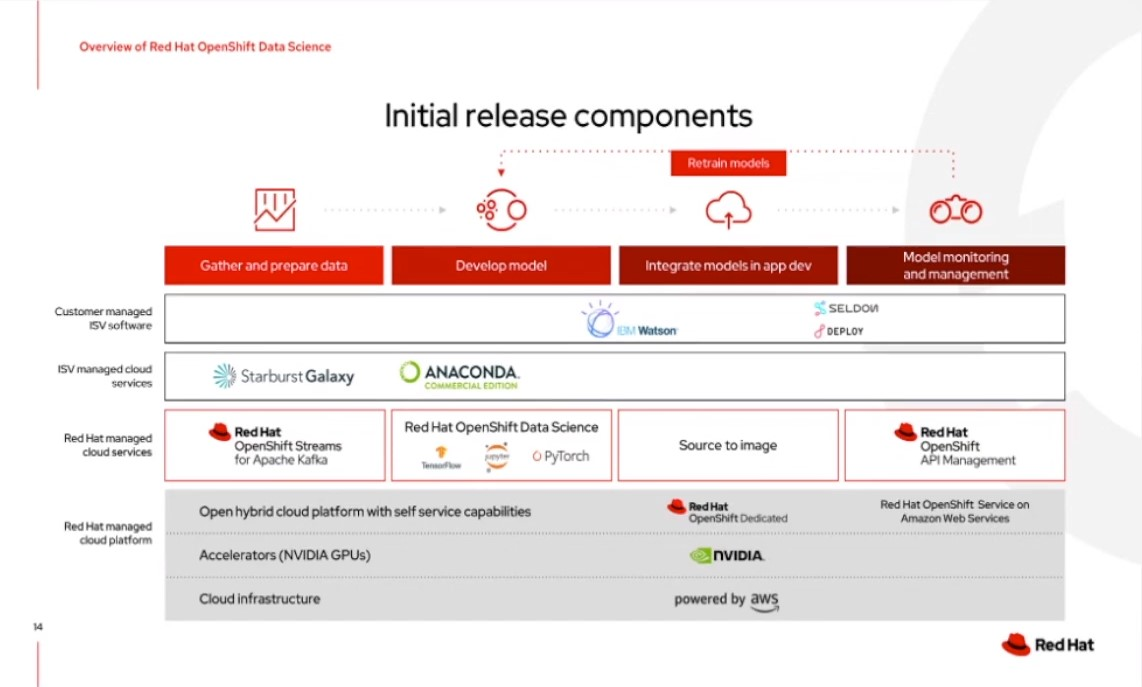

These updates highlight Red Hat’s vision for AI, bringing Red Hat’s commitment to customer choice to the world of intelligent workloads, from the underlying hardware to the services and tools, such as Jupyter and PyTorch, used to build on the platform. This provides faster innovation, increased productivity, and the capacity to layer AI into daily business operations through a more flexible, scalable, and adaptable open source platform that enables both predictive and generative models, with or without the use of cloud environments.

“Bringing AI into the enterprise is no longer an ‘if,’ it’s a matter of ‘when.’ Enterprises need a more reliable, consistent, and flexible AI platform that can increase productivity, drive revenue, and fuel market differentiation,” said Ashesh Badani, Chief Product Officer and Senior Vice President at Red Hat. “Red Hat’s answer for the demands of enterprise AI at scale is Red Hat OpenShift AI, making it possible for IT leaders to deploy intelligent applications anywhere across the hybrid cloud while growing and fine-tuning operations and models as needed to support the realities of production applications and services.”

Customers face many challenges when moving AI models from experimentation into production, including increased hardware costs, data privacy concerns, and lack of trust in sharing their data with SaaS-based models. Generative AI (GenAI) is changing rapidly, and many organisations are struggling to establish a reliable core AI platform that can run on-premises or in the cloud.

According to IDC, to successfully exploit AI, enterprises will need to modernise many existing applications and data environments, break down barriers between existing systems and storage platforms, improve infrastructure sustainability, and carefully choose where to deploy different workloads across cloud, datacenter, and edge locations. To Red Hat, this shows that AI platforms must provide flexibility to support enterprises as they progress through their AI adoption journey and their needs and resources adapt.

Red Hat OpenShift AI Is Red Hat’s Latest Innovation in AI

Red Hat’s AI strategy enables flexibility across the hybrid cloud, provides the ability to enhance pre-trained or curated foundation models with customer data, and offers the freedom to enable a variety of hardware and software accelerators. Red Hat OpenShift AI’s new and enhanced features deliver on these needs through access to the latest AI/ML innovations and support from an expansive AI-centric partner ecosystem. The latest version of the platform, Red Hat OpenShift AI 2.9, delivers:

- Model serving at the edge extends the deployment of AI models to remote locations using single-node OpenShift. It provides inferencing capabilities in resource-constrained environments with intermittent or air-gapped network access. This technology preview feature provides organizations with a scalable, consistent operational experience from core to cloud to edge and includes out-of-the-box observability.

- Enhanced model serving with the ability to use multiple model servers to support both predictive and GenAI. This includes support for KServe, a Kubernetes custom resource definition that orchestrates serving for all types of models; vLLM and text generation inference server (TGIS), serving engines for LLMs; and the Caikit-nlp-tgis runtime, which handles natural language processing (NLP) models and tasks. Enhanced model serving allows users to run predictive and GenAI on a single platform for multiple use cases, reducing costs and simplifying operations. This enables out-of-the-box model serving for LLMs and simplifies the surrounding user workflow.

- Distributed workloads with Ray, using CodeFlare and KubeRay, which use multiple cluster nodes for faster, more efficient data processing and model training. Ray is a framework for accelerating AI workloads, and KubeRay helps manage these workloads on Kubernetes. CodeFlare is central to Red Hat OpenShift AI’s distributed workload capabilities, providing a user-friendly framework that helps simplify task orchestration and monitoring. The central queuing and management capabilities enable optimal node utilization and the allocation of resources, such as GPUs, to the right users and workloads.

- Improved model development through project workspaces and additional workbench images that provide data scientists the flexibility to use IDEs and toolkits, including VS Code and RStudio, currently available as a technology preview, and enhanced CUDA, for a variety of use cases and model types.

- Model monitoring visualisations for performance and operational metrics, improving observability into how AI models are performing.

- New accelerator profiles enable administrators to configure different types of hardware accelerators available for model development and model-serving workflows. This provides simple, self-service user access to the appropriate accelerator type for a specific workload.

In addition to Red Hat OpenShift AI underpinning IBM’s watsonx.ai, enterprises across industries are equipping themselves with Red Hat OpenShift AI to drive more AI innovation and growth, including AGESIC and Ortec Finance.

“Red Hat’s vision for AI aligns perfectly with our goal to provide organisations with a reliable and sovereign AI solution. With Red Hat OpenShift AI, our kvant AI customers benefit from unparalleled flexibility, scalability, and security,” said Thomas Taroni, CEO at Phoenix Technologies. “With kvant AI in combination with Red Hat OpenShift AI, organisations are perfectly equipped to integrate predictive and generative models effortlessly, empowering everyone to create AI applications with confidence and agility.”

“As legacy IT models wrestle with the complexities of AI, WWT is committed to helping our clients make the right technology decisions faster when it comes to AI adoption. WWT’s AI Proving Ground is a testament to this. With Red Hat OpenShift AI as its foundational platform, the AI Proving Ground enables clients to test, train, validate, and deploy secure AI solutions that deliver real-world business value,” added Jeff Fonke, Sr. Practice Manager AI & Data at World Wide Technology Holding Co.
