Written By: Martin Dale Bolima, Tech Journalist, AOPG

As we stand on the threshold of the AI era, it has become increasingly evident that we require more than just any Artificial Intelligence (AI); what’s truly imperative is responsible AI.

No, that’s not a typo; it’s a fundamental distinction.

Responsible AI is about more than just the technology itself—IBM defines responsible AI as “a set of principles that help guide the design, development, deployment, and use of AI,” in turn “building trust in AI solutions that have the potential to empower organisations and their stakeholders.”

IBM further states that “responsible AI involves the consideration of a broader societal impact of AI systems and the measures required to align these technologies with stakeholder values, legal standards, and ethical principles,” with the ultimate aim “to embed such ethical principles into AI applications and workflows to mitigate risks and negative outcomes associated with the use of AI, while maximising positive outcomes.”

In other words, responsible AI is all about building AI you and your customers can trust.

It’s not just about the AI we want; it’s the AI we need for a better future.

“Without responsible AI and AI governance framework, companies will not be able to adopt AI at scale. When we advance AI, we make more accessibility, and we gain competitive advantage towards adopting AI,” said Catherine Lian, General Manager and Executive Technology Leader at IBM ASEAN, at the recently held IBM ASEAN AI Masterclass: Future of Responsible AI & Governance in ASEAN. “But organisations must also weigh towards introducing rewards against investment and risks that they see—privacy, accuracy, explainability, and bias are very important as organisations are growing to adopt AI.”

AI Is a Game Changer in Business

Of course, all that is set against the backdrop of AI’s unprecedented rise as a business differentiator in this digital age, where digital technologies are now obvious necessities. And it appears AI is the mother of all innovations at the moment, with PwC predicting that it will unlock approximately USD 16 trillion (yes, trillion) in value by 2030.

AI is already unlocking a lot of value for some of the world’s biggest companies. In fact, according to IDC, 25% of G2000 companies credit AI capabilities with contributing +5% to their earnings, highlighting its growing prominence in the business world. And it is only growing, with IDC also predicting that 80% of CIOs will leverage organisational changes to harness AI automation to drive agile, insight-driven digital business.

But, again, it is no longer enough just to deploy AI and, in particular, its ever-popular minion, generative AI (GenAI). That’s because inhibitors to AI adoption are aplenty, and they include:

- Data privacy concerns.

- Trust and transparency concerns.

- Minimising bias.

- Maintaining brand integrity and customer trust.

- Meeting regulatory obligations.

These are all serious matters that, if left unaddressed, can undermine or completely derail any organisation’s AI initiatives and turn this innovation from a game-changing asset into a potential liability.

This is where responsible AI comes into the picture, providing organisations with a template to unlock real value from AI and reap its many benefits.

“In order to take advantage of the very real benefits that AI can pose and bring to organisations, you have to adopt AI in a responsible way,” emphasised Christina Montgomery, Chief Privacy & Trust Officer at IBM, as she discussed IBM’s responsible AI initiative at the same IBM ASEAN AI Masterclass: Future of Responsible AI & Governance in ASEAN virtual event.

The question now is: Where does one even start?

A Trustworthy Policy Is Key for Responsible AI

IBM might have the answer within its core policy framework, encapsulated by three pivotal pillars:

- Regulate AI risks, not the AI algorithms. IBM proposes regulating the context in which AI is deployed, making sure to regulate high-risk use cases of AI (as in the case of deepfakes, for example).

- Make AI creators and deployers accountable, not immune to liability. People creating and deploying AI need to be held accountable for the way they develop and use AI.

- Support open AI innovation, not an AI licensing regime. Promoting an AI licensing regime will not only inhibit open innovation but can also result in regulatory capture of some sort.

This three-pronged policy is a solid foundation companies can use to advance responsible AI, and the time to act is now, according to Christina. She pointed to deepfakes as one of the most pressing challenges posed by generative AI, serious enough to compromise the integrity of elections, harm reputations, and spread fake news.

“The time is now to focus on AI safety and to focus on governance because generative AI has introduced some new and amplified existing risks associated with AI,” Christina pointed out. “At the same time, it has become very clear that AI is going to offer significant benefits. Balancing those benefits with the potential risks is really important now, more than ever, because of the rise of generative AI and the new risks we talked about, like deepfakes and content that can be created to harm people and generate more misinformation and disinformation.”

Again, IBM is taking an active role in this critical push for responsible AI, with Christina making clear that “every IBMer in the company is responsible for trustworthy AI” and that its mission is “to instil a culture of trustworthy AI throughout the company”—and cascade it to its clients.

“We are essentially leveraging our privacy program in something we’re calling integrated governance program,” Christina said. “There’s a complexity of emerging regulatory obligations, but across all of those, there’s an emerging set of high-level requirements: Things like having a risk management system, making sure you’re vetting your data, having transparency in the data that’s being used to train models, lifecycle governance and model management, and disclosing what data was used to train the models. We are essentially distilling all that to an AI baseline and applying a continuous compliance approach through our program to help IBM and our clients to comply with regulations and also to adopt trustworthy AI.”

Many Happy Returns: The Benefits of Trustworthy AI Are Worth All the Trouble

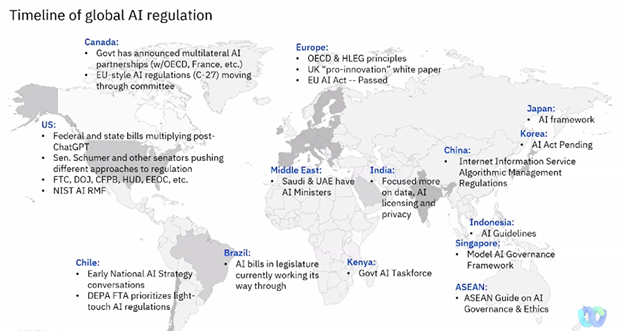

So, you are complying with regulations—something already established in various countries worldwide. More are expected to follow as well, according to Stephen Braim, Vice President, Government and Regulatory Affairs, Asia Pacific, at IBM, who noted in the same Masterclass that “there’s a lot of work going on how to regulate AI.”

You are also making AI that is trustworthy. What’s in it for you then?

According to Christina, the benefits of responsible, trustworthy AI are compelling, so much so that 75% of executives view it as a competitive differentiator. Beyond that, trustworthy AI can:

- Build for an organisation a sound data and AI foundation that is compliant with regulations.

- Improve AI adoption rate and the ability to operationalise it.

- Enable higher returns on investment.

- Build and retain investor confidence in AI.

- Reduce AI-related risks and frequency of failure.

- Attract and retain both talent and customers, and gain public trust and sympathy.

- Win over customers who place a premium on values and ESG commitment.

All these benefits, though, circle back to that big, bold tenet of trust: Do people trust AI well enough for them to adopt and support it fully?

“The biggest fear of governments and AI regulators is that they’re going to put all this work and no one’s going to adopt it, and that all comes back to what is the role of government in promoting trust and in promoting these [AI] applications for societal benefit. So, if you can’t get the trust equation right, you’re not going to get the adoption equation right.”

In other words, gain people’s trust, and you gain their support. You gain even greater adoption. This is why IBM, according to Stephen, believes that in the era of generative AI, user trust is more essential than ever.

This is particularly true in ASEAN, which Stephen describes as having a demographic that is unique for AI adoption due to its young population, high IT adoption, and strong innovation focus. As a corollary, AI matters in ASEAN, says Stephen, noting how it can be a major platform for digital transformation, drive productivity and economic growth, and transform industries like healthcare, education, and tourism.

Of course, AI’s far-reaching impact is undeniable and well-documented. What is left now, it appears, is to ensure that organisations—in the public and private sectors—are making AI responsible and trustworthy.

It is easier said than done, but IBM has laid the groundwork for it. Perhaps it is time for others to follow suit.
